AI is no longer a futuristic experiment in cybersecurity; it is already part of everyday security work. Cybersecurity teams now use it to spot threats faster, respond more effectively, and keep pace with attackers who are already using AI to work at lightning speed. The real advantage comes when AI is paired with human judgment, giving organizations defenses that are smarter, faster, and resilient enough to handle an evolving threat landscape.

AI in cybersecurity refers to the application of artificial intelligence technologies such as machine learning, large language models, and automated systems to detect, prevent, and respond to cyber threats. It analyzes massive amounts of security data, spots patterns humans would miss, and automates threat detection and incident response. Organizations use it to strengthen defenses, cut response times, and keep pace with increasingly sophisticated attacks.

Why AI in Cybersecurity Matters Today

Security teams used to keep up. Analysts reviewed alerts, wrote detection rules, and investigated incidents at a pace that matched the threats they faced. When attacks followed predictable patterns and moved at human speed, this approach worked.

Then the balance shifted.

Modern attackers deploy automation that launches thousands of phishing attempts in a single afternoon, adjusts tactics based on what's working, and scans for vulnerabilities faster than any team can manually monitor. Meanwhile, security operations centers process an avalanche of data, including logs, alerts, and signals that grow larger every quarter. Somewhere in all that noise, real threats are moving. Very quickly.

This is where AI in cybersecurity changes the equation. It sifts through the overwhelming volume at machine speed, learns what normal looks like across your environment, and flags the deviations that actually matter.

To understand why this shift is so important, it is helpful to examine how cybersecurity has evolved to this moment.

How We Got Here

Cybersecurity didn't always lean on AI. Automated defense started in the late 1980s with rule-based systems: tools that triggered alerts when specific conditions were met. They worked well for known threats but hit a wall when attackers did something new. The rules couldn't adapt, so security teams spent their time constantly updating them.

The early 2000s brought a shift. Advances in machine learning in cybersecurity allowed systems to do something different: learn from large datasets to understand typical traffic patterns and user behavior across an organization. Instead of just matching predefined rules, these models recognized when something deviated from the baseline. If a user suddenly accessed files they'd never touched before, or network traffic spiked unusually, the system noticed.

Traditional AI methods (supervised learning for malware classification, unsupervised learning for anomaly detection) became standard tools. They automated repetitive tasks like spam filtering and reduced the manual workload for security professionals. But even these improvements struggled to keep pace with increasingly sophisticated attacks.

The latest wave includes generative AI and agentic systems. Generative models enable security teams to interact with their tools using natural language, making it easier to explore specific questions without needing to master complex query languages. AI-powered agents now work alongside analysts, automating high-volume processes and freeing up human expertise for decisions that actually require it.

Each evolution responded to a gap the previous generation couldn't fill: from rigid rules to adaptive learning, from manual investigation to automated triage, from reactive defense to systems that anticipate and respond in real time.

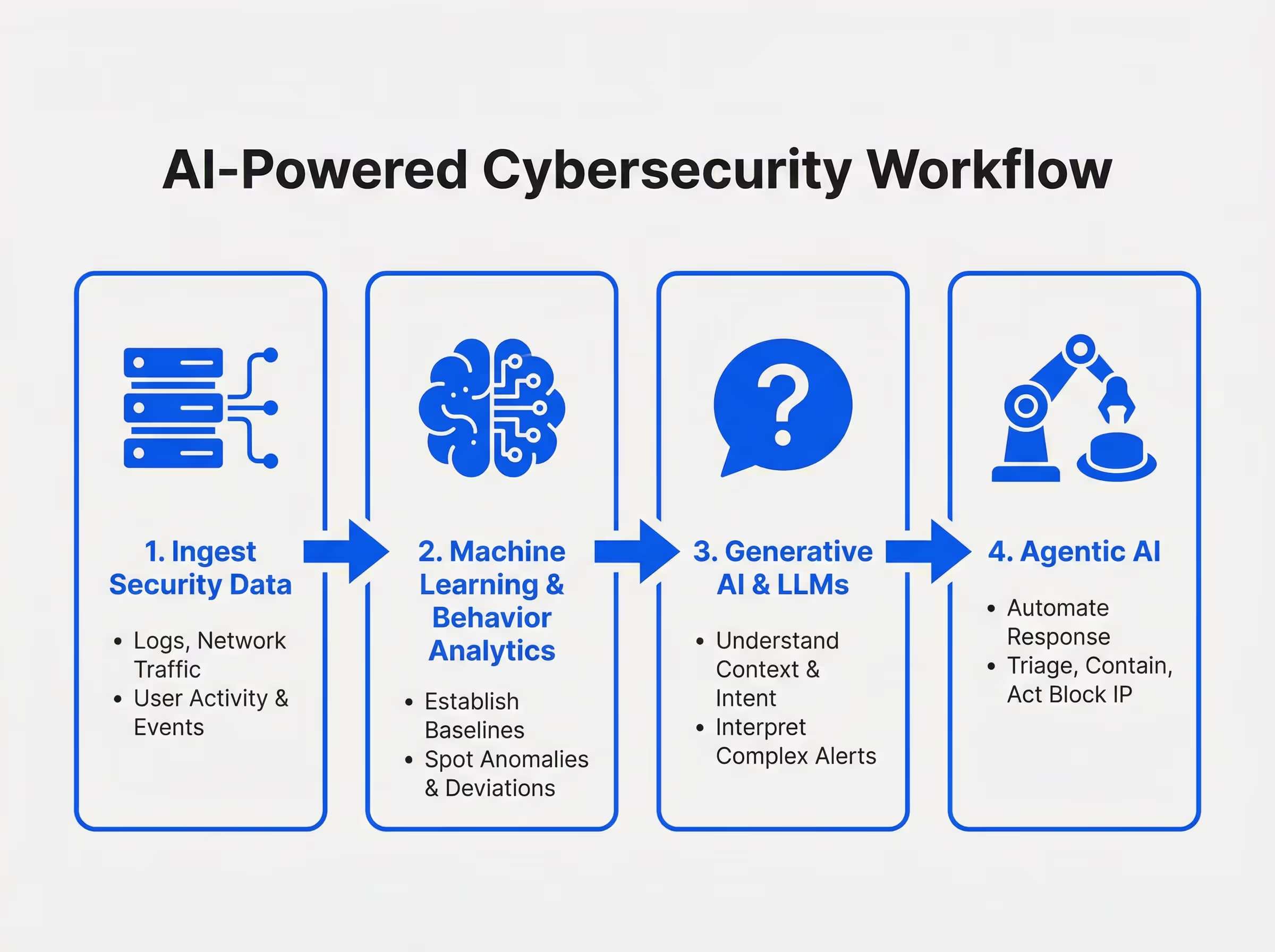

How AI Works in Cybersecurity

Understanding how AI works in cybersecurity begins with one idea: security is now too fast, too noisy, and too complex for humans to handle alone. AI steps in as an analytical engine that learns from data, adapts to behavior, and responds at machine speed. Below is a breakdown of the core technologies that power AI in cybersecurity and how they function behind the scenes.

Machine Learning: The Foundation of AI Security

At the heart of most AI-driven systems is machine learning. It analyzes enormous amounts of security data and learns what “normal” looks like inside an environment. Once it understands the baseline, it can spot anything that falls outside the pattern.

How Machine Learning Helps Defenders

- Detects anomalies that rule-based tools overlook

- Identifies early signs of compromise

- Reduces alert fatigue by filtering out noise

- Continuously improves as it processes more data

Types of Machine Learning Models Used in Cybersecurity

Supervised Learning

These models learn from labeled data: examples already marked as known safe activity or known malicious behavior. They are well suited to malware classification and spam filtering.
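To make this concrete, here is a minimal sketch of supervised spam filtering. It uses a naive Bayes classifier, which is one classic technique for this job. The four training messages and their labels are invented for illustration. A real filter would train on a large labeled corpus and use richer features than whitespace-split words.

```python
import math
from collections import Counter

# Toy labeled training data (hypothetical examples, not a real corpus).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the attached report", "ham"),
]


def train(samples):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = {}
    doc_counts = Counter()
    for text, label in samples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, doc_counts, vocab


def classify(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, float("-inf")
    for label, counts in word_counts.items():
        score = math.log(doc_counts[label] / total_docs)  # log prior
        total_words = sum(counts.values())
        for word in text.lower().split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing the score.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING_DATA)
print(classify(model, "free prize"))         # spam
print(classify(model, "review the report"))  # ham
```

The labels do the teaching here. The model never sees a rule saying "free" is suspicious; it infers that from which examples were marked as spam.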

Unsupervised Learning

These models learn without labels. Instead, they look for unusual behavior, such as a user downloading far more data than usual or a system communicating with unfamiliar destinations. This makes them well suited to insider threat detection and anomaly spotting.
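Here is a minimal sketch of label-free anomaly spotting. It uses a robust statistical outlier test, the modified z-score based on the median absolute deviation (MAD), as a simple stand-in for a learned unsupervised model. The user names, download volumes, and the conventional 3.5 cutoff are illustrative assumptions.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return users whose modified z-score exceeds the threshold (either direction).

    values: mapping of user -> observed metric (for example, MB downloaded today).
    The median absolute deviation (MAD) is robust to the very outliers we are
    looking for, and no labeled examples are required.
    """
    data = list(values.values())
    med = median(data)
    mad = median(abs(x - med) for x in data)
    if mad == 0:
        return []  # no spread among peers, so this test cannot score anyone
    flagged = []
    for user, x in values.items():
        modified_z = 0.6745 * (x - med) / mad
        if abs(modified_z) > threshold:
            flagged.append(user)
    return flagged


downloads_mb = {"alice": 120, "bob": 95, "carol": 110, "dave": 105, "erin": 4800}
print(mad_outliers(downloads_mb))  # ['erin']
```

The median and MAD are used instead of the mean and standard deviation because a single huge value would inflate those and partly hide itself.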

Reinforcement Learning

These models learn through trial and error, receiving rewards for effective decisions. The approach is still emerging in dynamic settings such as autonomous threat response, where a system must choose the most effective action without step-by-step human guidance.
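The trial-and-error loop can be sketched in its simplest form: a multi-armed bandit with epsilon-greedy exploration. This is a single-state simplification of full reinforcement learning. The action names and the fixed simulated rewards are hypothetical. In a real system the reward would come from incident outcomes or analyst feedback, and it would be noisy.

```python
import random

# Hypothetical response actions and the simulated reward each one earns.
SIMULATED_REWARD = {"alert_only": 0.2, "block_ip": 0.6, "isolate_host": 0.9}


def choose_action(q_values, epsilon, rng):
    """Epsilon-greedy: usually exploit the best-known action, occasionally explore."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))
    return max(q_values, key=q_values.get)


def train_policy(steps=200, epsilon=0.1, seed=7):
    rng = random.Random(seed)
    # Optimistic starting estimates (above any real reward) make the agent
    # try every action at least once before settling on one.
    q_values = {action: 1.0 for action in SIMULATED_REWARD}
    counts = {action: 0 for action in SIMULATED_REWARD}
    for _ in range(steps):
        action = choose_action(q_values, epsilon, rng)
        reward = SIMULATED_REWARD[action]  # stand-in for real feedback
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        q_values[action] += (reward - q_values[action]) / counts[action]
    return q_values


estimates = train_policy()
print(max(estimates, key=estimates.get))  # isolate_host
```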

Behavior Analytics: Understanding Normal to Spot Abnormal

Cyber attackers often imitate normal users. That makes signature-based systems easy to evade. Behavior analytics counters this by studying patterns over time, such as how users log in, what files they access, and how data moves across systems. The goal is simple. When something feels “off,” the system raises a flag.

What Data Behavior Analytics Uses

To build a complete understanding of typical behavior, analytics engines draw signals from multiple parts of the environment, including:

- Network traffic

- Database activity

- User activity

- System events

This combination of data provides a full view of what normal activity looks like inside the organization.

How Behavioral Analytics Works

Establishing the Baseline

Behavioral analytics begins by monitoring routine activity. It observes how employees log in, which applications they use, the amount of data they typically access, and when systems communicate with each other.

Over time, this becomes the behavioral baseline. Every future action is compared against this baseline.
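Here is a minimal sketch of baselining for one user. It assumes the history is a list of (login hour, MB downloaded) pairs. The baseline keeps the set of hours when the user normally logs in, plus the mean and standard deviation of daily download volume. A new event is then expressed as a deviation from that baseline. The field names and sample values are hypothetical. Production systems track many more signals and let the baseline shift over time.

```python
from statistics import mean, stdev


def build_baseline(history):
    """history: list of (login_hour, mb_downloaded) tuples for one user."""
    volumes = [mb for _, mb in history]
    return {
        "usual_hours": {hour for hour, _ in history},
        "mb_mean": mean(volumes),
        "mb_stdev": stdev(volumes),  # needs at least two observations
    }


def compare_to_baseline(baseline, login_hour, mb_downloaded):
    """Describe how far a new event sits from the user's baseline."""
    spread = baseline["mb_stdev"] or 1.0  # avoid dividing by zero on a flat history
    return {
        "unusual_hour": login_hour not in baseline["usual_hours"],
        "mb_z_score": (mb_downloaded - baseline["mb_mean"]) / spread,
    }


history = [(9, 120), (10, 95), (9, 110), (14, 105), (11, 130), (16, 100)]
baseline = build_baseline(history)
print(compare_to_baseline(baseline, login_hour=2, mb_downloaded=4800))
```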

Identifying Deviations

Once the baseline is in place, the analytics engine identifies activity that falls outside normal patterns. Each deviation is measured and scored according to how unusual it is.

This approach is especially effective at identifying:

- Rare login times or unusual locations

- Unexpected spikes in data access

- Irregular movement between internal systems

- Attempts to bypass standard workflows
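The scoring step can be sketched as a weighted sum over the deviation types listed above. The weights, the 0-to-1 signal strengths, and the triage thresholds are hypothetical placeholders. Real engines typically learn or tune these values from data rather than hard-coding them.

```python
# Hypothetical weights for each deviation type listed above.
WEIGHTS = {
    "rare_login_time": 0.2,
    "unusual_location": 0.3,
    "data_access_spike": 0.3,
    "lateral_movement": 0.4,
    "workflow_bypass": 0.3,
}


def risk_score(signals):
    """signals: deviation name -> strength in [0, 1]. Returns a 0-100 score."""
    raw = 0.0
    for name, strength in signals.items():
        if name in WEIGHTS:  # ignore signal types the scorer does not know
            raw += WEIGHTS[name] * min(max(strength, 0.0), 1.0)
    return round(100 * raw / sum(WEIGHTS.values()))


def triage(score):
    """Map a score to a hypothetical priority band."""
    if score >= 60:
        return "high"
    if score >= 30:
        return "medium"
    return "low"


event = {"rare_login_time": 1.0, "data_access_spike": 0.9}
score = risk_score(event)
print(score, triage(score))  # 31 medium
```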

Attackers may disguise their identities or use legitimate tools, but their behavior often remains inconsistent with typical patterns. This is where behavioral analytics shines.

How Behavioral Analytics Supports Cybersecurity

Behavioral analytics strengthens multiple layers of security. Some of its most critical applications include:

Detecting Insider Threats

Not all threats originate externally. Behavioral analytics can identify suspicious actions from employees or contractors, such as unauthorized data access or unusual system movement.

Detecting Advanced Persistent Threats (APTs)

APTs rely on remaining hidden for long periods. Behavioral analytics detects subtle anomalies that may be the first signs of an infiltrator establishing long-term access.

Anomaly Detection and Threat Hunting

Security teams use behavior-based insights to proactively search for threats. A single deviation from the baseline can become the starting point for uncovering a larger issue.

Incident Response and Investigation

After an incident occurs, behavioral analytics provides a clear record of what happened. It helps answer essential questions such as when the anomaly began, which systems were involved, and how the attacker moved through the environment.

Large Language Models and Generative AI

Generative AI and large language models (LLMs) bring a different layer of intelligence. Instead of focusing only on patterns, they work with language, context, and reasoning. This makes them powerful helpers in security operations.

LLMs understand context, interpret language, and reason through complex information that traditional tools treat as static text. Instead of scanning logs line by line, LLMs read them like a human analyst would. They extract meaning, identify relationships, and help security teams move through investigations with far greater clarity.

These models are not just text engines. In cybersecurity, they serve as context engines that enhance the intelligence of the entire security stack.

Smarter, Context-Aware Threat Detection

Traditional detection tools work like security guards following a checklist. They look for exact matches: known virus signatures, flagged IP addresses, or specific attack patterns they've been programmed to recognize. If a threat doesn't match something on the list, it slips through. AI threat detection works differently. Instead of just matching signatures, it understands context. It can examine a log entry (a record of what happened on your system) and recognize when a sequence of seemingly normal events adds up to something suspicious, even if that exact pattern has never been seen before. Large language models take this further by understanding relationships between security events.

An example of this is CYLENS, an LLM-powered threat intelligence copilot that integrates massive databases of known vulnerabilities and automatically connects the dots between new threats and your specific environment. This kind of reasoning helps teams spot what's actually dangerous earlier and focus on threats that matter, rather than drowning in alerts about every possible issue.

User and Entity Behavior Analytics (UEBA)

LLMs also elevate behavior analytics. Because they understand language and context, they can describe why a user’s behavior appears unusual, not just that it is unusual. They can distinguish between harmless anomalies and subtle signs of insider threats or credential abuse.

They can also help reduce false positives. Rule-based tools often over-alert, whereas LLMs can explain anomalies and place them within the broader behavior of a user or device, which brings clarity to machine-learning-driven detections.

Proactive Threat Intelligence

Modern cyber defense demands a proactive stance. LLMs can analyze and synthesize enormous volumes of threat intelligence feeds. They can summarize vulnerability reports, interpret malware behavior, and highlight potential attack paths based on both historical patterns and real-time data.

Instead of teams manually reading thousands of pages, LLMs deliver the insights that matter most.

MITRE ATT&CK Technique Mapping

Security teams use a framework called MITRE ATT&CK (essentially a comprehensive map of how attackers operate) to understand and categorize threats. Matching real-world security events to the right techniques in this framework used to be tedious work. Analysts had to manually review logs, incident reports, and threat intelligence, then figure out which attack technique they were looking at.

LLMs can automate much of this. When an unusual process starts running or a device connects to a suspicious domain, the model can quickly suggest which ATT&CK technique the activity matches. This speeds up response because teams learn sooner what type of attack they're dealing with and can follow established playbooks instead of starting their investigation from scratch.
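A model's classification is only useful if it can be checked. The sketch below shows one way to structure the request and validate the answer. It asks for strict JSON and rejects anything that is malformed or missing from a known list of technique IDs. The model call itself is left out because it depends on your provider. The prompt wording, the JSON shape, the sample event, and the small technique table are illustrative assumptions. The table holds a handful of real ATT&CK IDs.

```python
import json
import re

# A small, illustrative subset of MITRE ATT&CK technique IDs.
KNOWN_TECHNIQUES = {
    "T1059.001": "Command and Scripting Interpreter: PowerShell",
    "T1071.004": "Application Layer Protocol: DNS",
    "T1078": "Valid Accounts",
    "T1566.001": "Phishing: Spearphishing Attachment",
}
TECHNIQUE_ID = re.compile(r"T\d{4}(?:\.\d{3})?")


def build_prompt(event):
    """Ask for a strict JSON answer so the output can be machine-checked."""
    return (
        "Map this security event to one MITRE ATT&CK technique. "
        'Reply only with JSON: {"technique_id": "...", "rationale": "..."}\n'
        f"Event: {json.dumps(event)}"
    )


def validate_answer(raw_answer):
    """Return (id, name) for a well-formed, known technique, else None."""
    try:
        answer = json.loads(raw_answer)
    except json.JSONDecodeError:
        return None
    if not isinstance(answer, dict):
        return None
    technique_id = answer.get("technique_id")
    if not isinstance(technique_id, str) or not TECHNIQUE_ID.fullmatch(technique_id):
        return None
    if technique_id not in KNOWN_TECHNIQUES:
        return None  # well-formed but unknown: route to an analyst instead
    return technique_id, KNOWN_TECHNIQUES[technique_id]


event = {"process": "powershell.exe", "args": "-enc <base64 payload>", "parent": "winword.exe"}
prompt = build_prompt(event)
# Hypothetical model reply; in practice this would come from your LLM client.
reply = '{"technique_id": "T1059.001", "rationale": "Encoded PowerShell launched from Word"}'
print(validate_answer(reply))
```

Rejecting unknown IDs matters because a model can produce a plausible-looking technique number that does not exist.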

Phishing Email Detection and Response

Phishing remains one of the most common entry points for attackers. LLMs can analyze an email's tone, sentence structure, intent, and hidden cues. They can catch social engineering attempts that evade standard filters, and they can suggest responses or generate safe communication templates for users.
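LLMs weigh these cues implicitly. To show what some of them look like, here is a rule-based sketch (not an LLM) that pulls two classic signals out of an HTML email. The first is urgency phrasing. The second is a link whose visible text names one domain while the underlying URL points to another. The phrase list and sample message are hypothetical. Treat the code as a feature extractor whose output could feed a model or an analyst, not as a complete filter.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

URGENCY_PHRASES = ("urgent", "immediately", "verify your account", "password expires")
DOMAIN_IN_TEXT = re.compile(r"(?:[a-z0-9-]+\.)+[a-z]{2,}")


class LinkCollector(HTMLParser):
    """Collect visible text and (href, link text) pairs from an HTML body."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.links = []
        self._href = None
        self._link_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._link_text = []

    def handle_data(self, data):
        self.text_parts.append(data)
        if self._href is not None:
            self._link_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._link_text).strip()))
            self._href = None


def phishing_cues(body_html):
    parser = LinkCollector()
    parser.feed(body_html)
    parser.close()
    cues = []
    text = " ".join(parser.text_parts).lower()
    for phrase in URGENCY_PHRASES:
        if phrase in text:
            cues.append(f"urgency: {phrase}")
    for href, link_text in parser.links:
        target = (urlparse(href).hostname or "").lower()
        shown = DOMAIN_IN_TEXT.search(link_text.lower())
        # Visible text names a domain, but the link goes somewhere else.
        if shown and target != shown.group() and not target.endswith("." + shown.group()):
            cues.append(f"mismatched link: {shown.group()} -> {target}")
    return cues


email = (
    "<p>URGENT: verify your account immediately.</p>"
    '<a href="http://login.examp1e-support.net/reset">www.example.com</a>'
)
for cue in phishing_cues(email):
    print(cue)
```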

Agentic AI Systems: Autonomous Cyber Defenders

Agentic AI represents the next major evolution of AI in cybersecurity. These systems are powered by LLMs and advanced reasoning models that can plan, decide, and take action within defined boundaries. Instead of waiting for human direction, they move like autonomous junior analysts who can work through routine tasks at machine speed.

In cybersecurity, agentic AI can investigate alerts, draft incident notes, analyze patterns, and even suggest containment actions. With continuous feedback, these agents become more precise over time. They are not designed to replace human analysts. Instead, they act like Tier 1 SOC support running on autopilot, handling the repetitive work that often slows teams down.

What Agentic AI Can Do Today

- Triage and classify alerts

- Investigate low-level incidents

- Map suspicious behaviors to MITRE ATT&CK techniques

- Recommend initial containment steps

- Generate summaries for handoff to human responders

- Learn from outcomes and refine their decision-making

Agentic AI reduces the operational load on security teams and frees human analysts to focus on higher-stakes reasoning where intuition and experience still matter most.

How These Components Work Together

Cybersecurity rarely hinges on a single tool. Effective protection emerges from multiple layers working in sync. AI brings those layers together in a way that mirrors how a well-trained SOC team collaborates: fast handoffs, shared context, and coordinated action.

A Simple, Real-World Example

It is two in the morning, and a user account that normally logs in during office hours suddenly begins downloading a large volume of sensitive files.

Behavior analytics is the first to react. It compares the activity to the user’s historical patterns and flags the behavior as unusual. Something does not fit the baseline.

Machine learning steps in next. It checks the event against known risk patterns and similar past incidents. Based on those correlations, it elevates the activity to a high-priority alert.

Large language models then interpret the event. They summarize what happened, explain the significance in plain language, and recommend potential next steps for the analyst. They may identify whether the behavior matches insider threat patterns or signs of credential compromise.

Agentic AI closes the loop. Operating within predefined guardrails, it initiates a safe, temporary action such as pausing the session, restricting access, or isolating the endpoint until a human analyst reviews the case.

Each layer reinforces the others. Behavior analytics spots the anomaly. Machine learning quantifies the risk. LLMs add context. Agentic AI takes controlled action.

Together, they create a security system that works faster, smarter, and far more precisely than traditional tools that rely only on static rules or manual investigation.
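The predefined guardrails in the example above can be sketched as a small policy check that sits between the agent and the systems it touches. In this hypothetical version, the agent may act alone only when two conditions hold: the action is on an allowlist of temporary, reversible containment steps, and the risk score clears a threshold. Everything else is escalated, and every decision is logged. The action names and the 0.8 threshold are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class GuardedAgent:
    # Hypothetical allowlist: temporary, reversible containment steps only.
    allowed_actions: frozenset = frozenset({"pause_session", "restrict_access", "isolate_endpoint"})
    min_risk: float = 0.8
    audit_log: list = field(default_factory=list)

    def handle(self, action, target, risk):
        """Permit allowlisted high-risk containment; escalate everything else."""
        if action in self.allowed_actions and risk >= self.min_risk:
            decision = "executed"   # permitted without prior approval
        else:
            decision = "escalated"  # a human analyst must approve first
        # Every decision is recorded so analysts can review what the agent did.
        self.audit_log.append(
            {"action": action, "target": target, "risk": risk, "decision": decision}
        )
        return decision


agent = GuardedAgent()
print(agent.handle("pause_session", "user:jdoe", risk=0.92))   # executed
print(agent.handle("delete_account", "user:jdoe", risk=0.99))  # escalated
```

Destructive actions such as deleting an account are never on the allowlist. Even at very high risk, they wait for a person.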

Key Use Cases of AI in Cybersecurity

Below is a consolidated table that organizes the core applications of artificial intelligence in cybersecurity, including generative AI, machine learning, and agentic AI.

Why These Use Cases Matter

Looking across these use cases, a pattern becomes clear. AI in cybersecurity is not a single tool or feature. It is a layered ecosystem running quietly in the background, learning from behavior, connecting signals, and reacting faster than any human team could on its own. The table shows how each part of this ecosystem handles a specific job. Some models hunt for anomalies. Others interpret intent. Agentic systems step in to take action when the situation calls for speed.

Together, these capabilities form a security posture that feels less like a collection of tools and more like a living system that grows sharper with every event it encounters. This is where the real value of artificial intelligence in cybersecurity begins to show. It improves what teams can see, how quickly they can respond, and how confidently they can protect sensitive data in a world where attackers are evolving just as fast.

Now that we have explored the practical use cases, we can pull the lens back and examine the broader advantages.

Benefits of AI in Cybersecurity

The benefits of AI in cybersecurity go beyond just automating tasks. AI changes how organizations manage risk by providing faster detection, smarter prioritization, and clearer insights across complex environments. Its real strength is the ability to learn continuously, adapt to new threats as they emerge, and process vast amounts of data that would overwhelm even large security teams.

Continuous Learning and Adaptive Intelligence

Unlike static rule-based tools, AI improves with use. Machine learning in cybersecurity establishes what normal looks like in your environment, then spots deviations that signal trouble. Because the system keeps learning from the threats it encounters, attackers have a harder time studying your defenses and finding a way around them. As a result:

- Detection improves as threats evolve

- Attackers find it harder to bypass defenses

- Teams gain a better understanding of their threat landscape

Faster Threat Detection and Response

Threats don't wait for security teams to catch up. AI processes massive volumes of network traffic, logs, and user activity in real time, connecting dots that would take human analysts hours or days to piece together. Generative AI in cybersecurity goes further by summarizing what's happening and suggesting immediate steps to contain the threat. This helps teams:

- Catch malware, ransomware, and phishing attempts earlier

- Shrink the window attackers have to operate

- Respond faster with coordinated action

Discovery of Unknown and Emerging Threats

Sophisticated attackers constantly develop new tactics. AI can help identify threats that were previously unknown or not yet mapped in traditional security tools, including emerging attack vectors, vulnerabilities, and patterns that might otherwise go unnoticed. This supports:

- Identification of zero-day exploits

- Detection of advanced persistent threats (APTs)

- Strengthened proactive defense posture

Enhanced Vulnerability Management

Organizations face an endless stream of potential weaknesses: outdated software, exposed data, and misconfigured cloud applications. AI scans continuously and does something more valuable than just listing problems. It ranks them by actual risk, telling teams which vulnerabilities attackers are most likely to exploit. As a result:

- Focus shifts to threats that matter

- Less exposure to critical weaknesses

- Faster decisions on what to fix first
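Risk-based ranking can be sketched as a score that combines severity with two other factors: how likely a flaw is to be exploited and how much the affected asset matters. The multiplicative formula, the 1.5 boost for internet-facing assets, and the sample findings are hypothetical. The exploit-likelihood field stands in for whatever signal your tooling provides, such as an EPSS-style probability.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    vuln_id: str               # hypothetical identifiers, not real CVEs
    cvss: float                # base severity, 0-10
    exploit_likelihood: float  # probability of exploitation, 0-1
    asset_criticality: int     # 1 = low-value system ... 3 = crown jewel
    internet_facing: bool


def priority(finding):
    """Higher means fix sooner."""
    score = (finding.cvss / 10) * finding.exploit_likelihood * finding.asset_criticality
    if finding.internet_facing:
        score *= 1.5  # hypothetical boost: externally reachable assets are easier to attack
    return score


findings = [
    Finding("VULN-A", cvss=9.8, exploit_likelihood=0.02, asset_criticality=1, internet_facing=False),
    Finding("VULN-B", cvss=7.5, exploit_likelihood=0.60, asset_criticality=3, internet_facing=True),
    Finding("VULN-C", cvss=5.3, exploit_likelihood=0.30, asset_criticality=2, internet_facing=False),
]
for f in sorted(findings, key=priority, reverse=True):
    print(f.vuln_id, round(priority(f), 3))
```

In this sample, the finding with the highest CVSS score ranks last. It is unlikely to be exploited and sits on a low-value internal system.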

Actionable Insights and Simplified Reporting

AI synthesizes data from multiple sources, including internal logs, network traffic, and external threat intelligence feeds. It identifies hidden patterns, correlates events, and produces reports that are clear and actionable. This helps security teams make informed decisions and communicate effectively with other stakeholders. The benefits include:

- Clear, digestible insights for analysts and management

- Accelerated incident investigation

- Enhanced collaboration across teams

Reduction of False Positives and False Negatives

AI helps distinguish real threats from harmless anomalies, reducing alert fatigue. By recognizing patterns and understanding context, it avoids flooding teams with false alarms while still catching critical incidents. This leads to:

- Less time wasted on irrelevant alerts

- Higher confidence in detected threats

- More efficient SOC operations

Skills Enhancement for Security Teams

Generative AI translates technical threat data into natural language, helping junior analysts understand and act on complex incidents quickly. It also provides suggested remediation steps, enabling less experienced team members to perform advanced tasks with confidence. The results are:

- Faster onboarding for new analysts who can contribute sooner

- Better productivity across all experience levels

- More consistent responses to threats, regardless of who's handling them

Scalability Across Complex Environments

Organizations today operate across remote offices, multiple clouds, and countless devices. Traditional security tools struggle to cover this complexity, especially as it keeps expanding.

AI scales well. It can monitor thousands of endpoints, cloud environments, and applications simultaneously while staying effective. Agentic AI in cybersecurity helps defenses adapt as threats grow more sophisticated, however large or distributed your infrastructure becomes. This translates into:

- Security that covers your entire system in real time, no matter how large it gets

- Consistent protection as you add new tools, teams, or locations

- Infrastructure that's ready for whatever threats emerge next

Challenges, Limitations, and Disadvantages of AI in Cybersecurity

AI gives security teams real power, but it also introduces problems that traditional tools never had to worry about. The disadvantages of AI in cybersecurity are not abstract ideas. They show up in daily operations, influence how teams make decisions, and shape the risks organizations quietly carry. To use artificial intelligence in cybersecurity responsibly, leaders need a clear view of both its strengths and the shadows it casts.

AI-Powered Attacks

Attackers are not only keeping up. They are accelerating. The same innovations that help defenders detect threats faster now fuel AI cyber attacks that learn, adjust, and move at machine speed. We are seeing autonomous malware that rewrites itself mid-execution, scanners that sweep entire environments in seconds, and worms that propagate too quickly for human intervention. You no longer need a large team or a long timeline to launch a sophisticated attack. A single person with the right AI models can cause real damage.

Adversarial AI and Model Poisoning

AI models are smart, but they are also impressionable. They trust whatever data you feed them, and attackers exploit this with precision. By injecting poisoned data or crafting inputs that trick a model’s logic, attackers can reroute its judgment entirely. One poisoned dataset can teach an AI system to ignore genuine threats. One adversarial input can turn a malicious action into something the model reads as normal. When that happens, the entire security stack starts making decisions on a false foundation.

Sophisticated Phishing and Deepfake Threats

Generative AI is a gift to attackers who rely on deception. Deepfake audio that sounds exactly like your CFO. A video message from your CEO urging a “quick transfer.” Emails that mimic writing style so closely they feel familiar. These attacks work because they no longer look suspicious. They look real. The old advice of “trust your instincts” does not hold up when the attacker can borrow someone’s voice, tone, and timing with perfect accuracy.

Ethical, Bias, and Trust Concerns

AI in cybersecurity also raises a series of human questions.

Algorithmic Bias

An AI model is only as fair as its training data. If the data skews, the model skews with it. In cybersecurity, this can lead to unfairly flagged accounts, misinterpreted workflows, and biased alerting patterns that undermine confidence in the system. Good datasets are not a luxury. They are fundamental.

Transparency and Explainability

Security teams cannot blindly trust a system they cannot explain. When an AI tool flags an activity, teams need to understand why. Without explainability, investigations stall, compliance becomes harder, and accountability dissolves. Explainable AI is not only a technical requirement. It is a governance necessity.

Operational and Human Capital Challenges

AI can automate heavy, repetitive work. What it cannot replicate is human judgment. When teams lean too heavily on automated detection and response, they lose the instincts that make analysts effective. This creates a quiet risk: the team becomes good at using the tool but less capable of catching what the tool misses.

Data Privacy and Compliance Risks

AI needs data to learn and improve, and that creates another layer of responsibility. When models ingest customer data, employee information, or regulated records, organizations must ensure they are not creating privacy violations in the name of security. If training data leaks or is mishandled, the incident becomes both a cybersecurity failure and a compliance issue. The cost of that mistake is rarely small.

AI Strengths and Limitations in Cybersecurity

Preparing for AI-Driven Cyberattacks

AI cyberattacks adapt in real time, find vulnerabilities at machine speed, and mimic legitimate behavior well enough to slip past rule-based tools. Defending against them requires more than adding another security product to your stack. You need a strategy that combines governance, skilled people, and AI threat detection systems that can match the speed and sophistication of what's coming at you.

Evaluate Third-Party Vendors Carefully

Many attacks enter through external vendors, including through their AI-enabled tools or software. To protect your organization:

- Assess each vendor’s AI governance policies and safeguards.

- Review monitoring protocols, drift detection, and contingency measures.

- Include service-level agreements (SLAs) and KPIs to ensure accountability.

- Stay in continuous communication, especially when vendors introduce new features or updates.

Understanding how vendors manage AI helps prevent vulnerabilities from being introduced into your environment.

Secure Internal AI Systems

Organizations building their own AI models face unique challenges:

- Internal governance: Establish clear guardrails to prevent model manipulation, misconfigurations, or poisoning attacks.

- Transparency: Ensure models’ decision-making processes are understandable and auditable.

- Human-in-the-loop oversight: Analysts should validate AI recommendations to catch hallucinations or manipulation by attackers.

Properly governed internal AI systems protect your organization and provide insight to boards and stakeholders.

Leverage AI as a Defensive Tool

AI can accelerate defense just as attackers use it to launch attacks:

- Quickly correlate alerts and identify the most urgent threats.

- Enable security teams to respond within minutes rather than hours.

- Augment junior analysts by providing actionable insights and recommended remediation steps.

Using AI defensively ensures that security operations can keep pace with increasingly sophisticated AI-powered attacks.

Build Transparency and Explainability

AI systems cannot operate as opaque “black boxes.” Organizations should:

- Maintain clear visibility into AI actions and decision-making processes.

- Ensure boards, stakeholders, and security teams understand how AI affects internal systems.

- Retain accountability: even when AI is deployed, organizations remain responsible for outcomes.

Transparency strengthens trust and ensures compliance with emerging regulatory guidance.

Invest in Cybersecurity Talent

Human expertise remains critical in the AI era:

- Upskill security teams to understand and respond to AI-driven threats.

- Ensure staff can manage AI tools, interpret outputs, and intervene when necessary.

- Recognize that AI complements human analysts rather than replacing them.

The combination of skilled personnel and AI-driven systems provides the strongest defense against sophisticated attackers.

Adopt Frameworks and Best Practices

Organizations can leverage established frameworks to govern AI risk effectively:

- Use guidelines such as NIST’s AI Risk Management Framework to govern, map, measure, and manage AI risks.

- Implement structured oversight, logging, and audit protocols for internal and vendor systems.

- Continuously update policies to reflect evolving AI-driven threats and regulatory expectations.

Following structured frameworks ensures that AI adoption strengthens security without introducing unmanageable risk.

Real-World Applications of AI in Cybersecurity

AI in cybersecurity is not a distant concept. It is already deeply embedded in the tools and workflows organizations depend on every day. These applications show how machine learning, behavioral analytics, generative AI, and agentic AI help security teams protect digital environments with greater speed and accuracy.

Identity and Access Security

Modern identity systems use AI to evaluate login behavior, device reputation, and contextual risk signals. This helps detect unusual activities such as impossible travel, abnormal login sequences, or suspicious privilege use. AI-driven identity protection strengthens Zero Trust programs and reduces the chance of account compromise.
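Impossible-travel detection, one of the signals mentioned above, can be sketched with a great-circle distance and an implied-speed check between two consecutive logins. The 900 km/h ceiling, the 100 km geolocation tolerance, and the sample coordinates are assumptions. The timestamps are assumed to be in a single time zone, such as UTC. Real systems usually feed this signal into a broader risk score rather than blocking on it alone.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0   # assumption: roughly airliner cruising speed
GEOIP_TOLERANCE_KM = 100.0  # assumption: ignore jumps within geolocation error


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def impossible_travel(prev_login, next_login):
    """Each login is (timestamp, latitude, longitude) for the same account."""
    hours = (next_login[0] - prev_login[0]).total_seconds() / 3600
    distance = haversine_km(*prev_login[1:], *next_login[1:])
    if distance <= GEOIP_TOLERANCE_KM:
        return False
    return hours <= 0 or distance / hours > MAX_PLAUSIBLE_KMH


new_york = (datetime(2024, 5, 1, 9, 0), 40.7128, -74.0060)
london = (datetime(2024, 5, 1, 10, 0), 51.5074, -0.1278)
boston = (datetime(2024, 5, 1, 13, 0), 42.3601, -71.0589)
print(impossible_travel(new_york, london))  # True
print(impossible_travel(new_york, boston))  # False
```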

Email Security and Phishing Prevention

Generative AI has increased the volume and sophistication of phishing attacks. Security tools now use AI to analyze email metadata, writing patterns, tone, and intent. They detect subtle anomalies that traditional filters miss. AI also helps protect against voice and video deepfakes used in social engineering campaigns.

Network and Endpoint Protection

AI-powered systems continuously learn from network traffic and endpoint activity. They detect unusual behavior, identify malware variants, block unknown threats, and predict potential attack paths. This approach is far more adaptive than signature-based tools.

Cloud Security and Misconfiguration Detection

Cloud environments produce huge amounts of activity logs. AI analyzes these logs in real time to detect unsafe configurations, unauthorized access, or risky data movement. This is especially useful in multi-cloud setups where oversight is difficult without automated intelligence.

Threat Intelligence and Attack Prediction

AI helps security teams process threat feeds, correlate global attack trends, and identify patterns linked to emerging threat actors. This allows organizations to predict likely attack methods and prepare defenses before attackers strike.

SOC Automation and Incident Response

Security operations centers use AI to automate alert triage, event correlation, report generation, and early-stage investigations. Agentic AI systems can analyze incidents, recommend containment steps, and take initial action such as isolating a device. This reduces response time and improves efficiency.

Fraud Detection and Transaction Monitoring

Financial institutions use AI to monitor transactions, detect unusual spending behavior, and stop fraud in real time. Machine learning models identify subtle signals that point to fraudulent intent without creating unnecessary false positives.

Vulnerability Management and Patch Prioritization

AI helps identify weak points in digital environments, map the potential impact of each vulnerability, and prioritize the most critical issues. This prevents teams from wasting time on low-risk alerts and increases focus on threats that matter.

Data Protection and Leak Prevention

AI-powered data loss prevention systems classify sensitive information, detect risky user actions, and prevent unauthorized sharing. These systems can also identify Shadow AI usage, where employees copy or upload sensitive data into public AI tools.
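A long-standing building block beneath these classifiers is pattern matching with validation. The sketch below finds candidate payment card numbers in text, such as a prompt about to be pasted into a public AI tool. It keeps only the numbers that pass the Luhn checksum, which cuts false matches on random digit runs. This is rule-based, not machine learning, and the sample text is hypothetical. The number 4111 1111 1111 1111 is a widely used test number, not a real card.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits):
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text):
    """Return digit strings that look like card numbers and pass Luhn."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


prompt = "Summarize this ticket: card 4111 1111 1111 1111, order ref 4111 1111 1111 1112."
print(find_card_numbers(prompt))  # ['4111111111111111']
```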

Physical Security and Critical Infrastructure Protection

AI is now used in video surveillance, drone monitoring, and access control. It detects unusual movement, suspicious activity, and safety risks in facilities and high-security environments. Critical infrastructure operators also use AI to monitor industrial control systems and prevent operational disruptions.

The Future of AI in Cybersecurity

AI is steering cybersecurity into a new phase where systems do more of the heavy lifting as teams spend less time chasing noise. Of course, it will not happen overnight, but the shift is already underway. Organizations that move early will operate with more clarity and fewer surprises.

Smarter Security Operations

AI will take over the routine sorting, correlating, and filtering that usually clutters a SOC. Analysts will step into a cleaner workspace where they can think, investigate, and decide instead of wrestling with endless alert queues.

Predictive and Preventive Defense

Instead of waiting for things to break, AI models will help teams see what is coming. They will map likely attack paths, flag emerging weaknesses, and spot fraud before it turns into a reportable incident.

Agentic AI for Deeper Automation

Agentic systems will go beyond quick summaries. They will plan small investigations, test ideas, and carry out multi-step tasks on their own. This gives teams more breathing room for the work that actually moves security forward.

Evolving Deception and Physical Security

AI will tighten the link between digital and physical security. Think adaptive decoys, smart video analytics, and drone surveillance that responds in real time. These systems will make it harder for attackers to tell what is real.

Controlling Shadow AI

As employees pull public AI tools into daily work, accidental exposure becomes a real risk. Organizations will need firm guardrails, better monitoring, and safer internal options to keep sensitive data from slipping out.

Rising Expectations for Governance and Transparency

Regulations such as the EU AI Act are raising the bar on fairness, visibility, and responsible use. Companies will need to show that their AI is explainable, secure, and free of unintended bias, not just powerful.

A New Shape for the Cybersecurity Workforce

AI will not replace security teams, but it will reshape their priorities. Routine tasks will fade into automation, while new hybrid roles will emerge for professionals who understand both security and AI.

Conclusion

AI has already changed the cybersecurity landscape in ways that cannot be undone. It works behind the scenes in detection systems, fuels predictive models, strengthens incident response, and helps overwhelmed security teams move from firefighting to forward-thinking. But it also introduces a new layer of responsibility. Attackers now use the same technology to scale their operations, manipulate data, and slip past traditional controls. The balance of power shifts constantly, and the only sustainable strategy is one that blends human judgment with machine efficiency.

Organizations that approach AI with clarity, strong governance, and the right talent will be positioned to thrive even as the threat landscape grows more complex. Contrary to popular belief, the future is not about replacing people with autonomous systems. Rather, it is about letting AI handle the tedious work while security professionals focus on decisions that require nuance, context, and accountability. With thoughtful design, transparent systems, and continuous oversight, AI becomes less of a wildcard and more of a dependable ally.

The path forward is simple. Use AI deliberately. Govern it responsibly. Keep people in the loop. And build a security posture that is prepared for an adversary who is already using these tools. When AI and human expertise work together, organizations gain a level of speed, scale, and resilience that sets a new standard for cybersecurity.

Frequently Asked Questions about AI in Cybersecurity

What is the role of AI in cybersecurity?

AI acts as the force multiplier security teams have been begging for. It sifts through massive volumes of data at machine speed, detects subtle anomalies humans would miss, and brings context to noisy environments. It doesn’t replace strategy; it sharpens it, giving defenders earlier visibility and faster decision-making.

Is AI going to take over cybersecurity?

Not even close. AI can automate repetitive tasks and speed up detection, but it can’t replicate human intuition, ethical judgment, or the subtle contextual calls that make or break an investigation. Think of AI as the world’s fastest analyst, not the CISO. Humans still set direction, validate decisions, and carry responsibility.

What are some examples of AI in cybersecurity?

From phishing detection and anomaly spotting to insider-risk monitoring, threat modeling, triage automation, malware analysis, and behavioral analytics, AI now sits inside nearly every modern security workflow. If it requires speed, pattern recognition, or correlation across fragmented data, AI is likely powering it behind the scenes.

Should companies wait for AI to “mature” before adopting it?

Waiting isn’t a defensive strategy; it’s an invitation. Attackers aren’t waiting. They’re already using AI to scale reconnaissance, craft tailored payloads, and automate evasion. Organizations don’t need “perfect AI”; they need governed, well-implemented AI that strengthens what their people already do. Maturity comes from using it, not from watching it evolve from the sidelines.

Who is responsible when AI gets something wrong?

The organization. AI is a tool, not a decision-maker. Human oversight remains the final layer of defense, validating alerts, interpreting context, and making judgment calls AI can’t. Responsible AI only works when humans stay in the loop and governance is intentional, documented, and enforced.

What is Shadow AI?

Shadow AI refers to the unsanctioned use of public AI tools by employees. It can expose sensitive data, so companies need strong policies and monitoring to prevent accidental leaks.
