AI is transforming productivity across every industry and business function, from marketing and design to legal and engineering. But while employees rush to embrace tools like ChatGPT, Gemini, and Microsoft Copilot, many are also adopting other AI tools without oversight from IT or security teams. As this grassroots usage grows, so do the volume and sensitivity of the data flowing into AI tools. Companies now face a critical challenge: how to capture the enormous potential of AI while managing the risks it can introduce to their data.

Much of today’s enterprise AI activity falls under what's known as "shadow AI"—tools and workflows employees adopt on their own to move faster and work smarter. For forward-thinking organizations, this is more than just a security issue—it’s a powerful opportunity. By understanding how AI is being used in practice, companies can identify what’s working on the front lines and scale those successes across the business in a secure, strategic way.

Traditional security tools have limited visibility into this new wave of AI tools. They can’t see which tools employees are using, what data employees are sharing, or the risk profile of each AI tool based on its security practices and how it handles data. To enable safe, effective AI adoption, organizations need both visibility into usage and control over their data.

What Is Security for AI?

At Cyberhaven, we’re excited to introduce our latest solution, Security for AI.

It provides continuous visibility into AI usage and enforces risk-based controls, so you can get the benefits of AI while keeping your data safe.

At its core are four powerful features:

- Shadow AI Discovery

- AI Usage Insights

- AI Risk IQ

- AI Data Flow Control

Together, these features give organizations the insight to understand how employees use AI most effectively, while ensuring that risky AI tools neither receive nor leak sensitive data.

Shadow AI Discovery

The first step to AI data security is understanding usage.

Shadow AI Discovery identifies the AI tools in use and builds a registry of them. Broadly, coverage includes:

- Dedicated AI tools like Claude and DeepSeek

- Embedded AI within SaaS applications like Notion and Zoom

- AI accessed via corporate email accounts, for example, when someone signs into ChatGPT with their work email address

- AI accessed via personal email accounts, for example, when someone tries to circumvent controls by registering for ChatGPT with their personal email address

- Established AI tools, including the ones most popular with your employees

- Emerging AI tools that are new, recently adopted, or relatively unknown

Shadow AI Discovery is designed to uncover all AI tools in use, whether security and IT teams know about them or the tools fly under their radar.

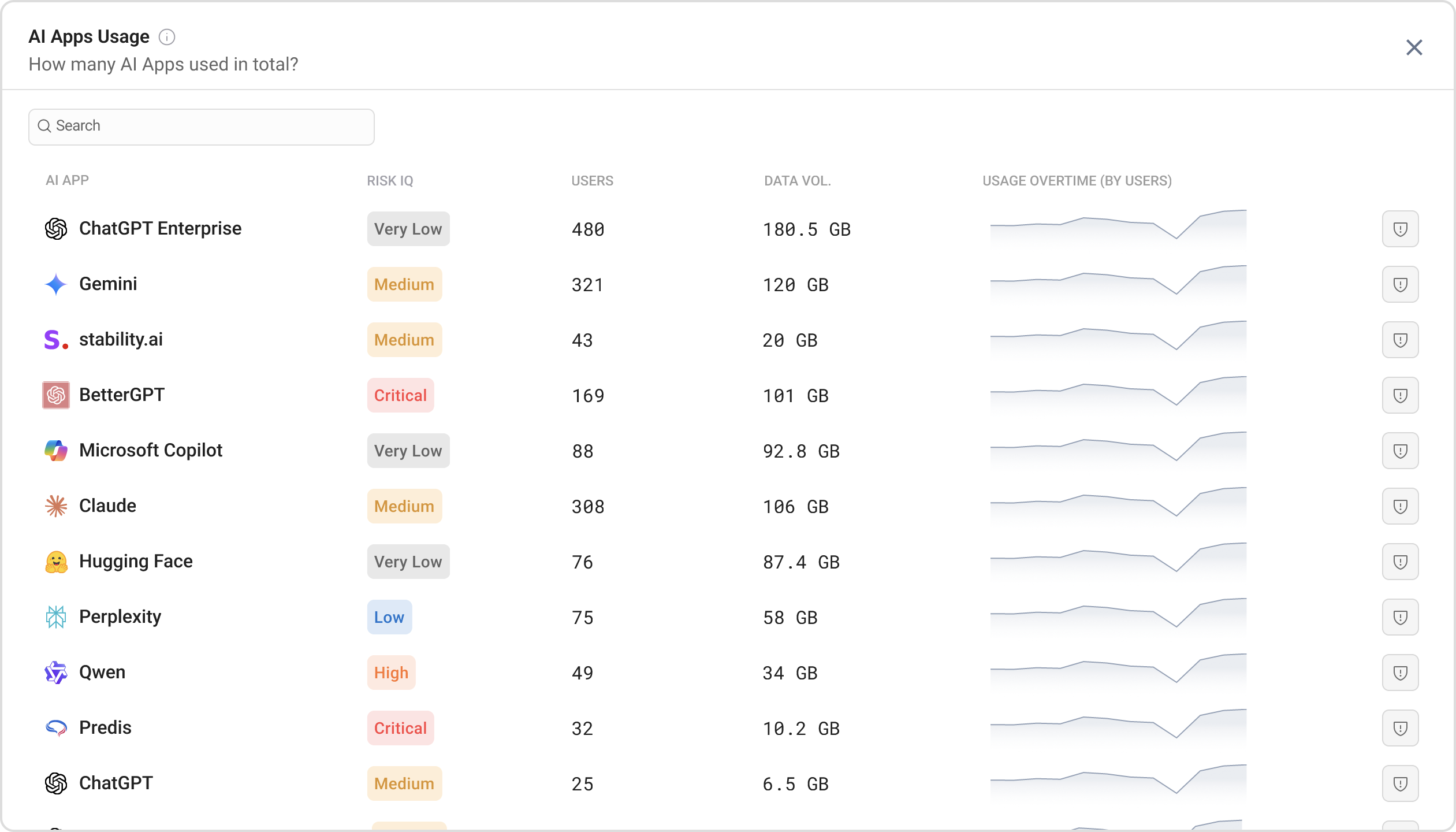

AI Usage Insights

Knowing which tools are in use is just the beginning. You also need to understand who is using them, how they use them, and what data is being shared.

AI Usage Insights gives you a central console to monitor AI activity from three perspectives:

- Tools: which AI applications are most frequently used and their usage trends

- Users: who are your most active and highest-risk users of AI

- Data: what types of sensitive data employees share with AI and what types of sensitive data AI shares with employees

For security teams, this intelligence surfaces concerning patterns so they can apply controls that stop sensitive data leaks. For IT teams and department managers, it supports AI adoption by identifying popular tools and the power users who can champion them.

AI Risk IQ

Not all AI tools pose the same level of risk. Some, like ChatGPT or Gemini, provide transparency and access controls and are operated by well-known companies. But how familiar are you with the other AI tools?

This is where AI Risk IQ can help by providing detailed risk profiles for individual AI tools. Our proprietary system scores each one across five dimensions:

- Data sensitivity and security

- Model security risks

- Compliance and regulatory risks

- User authentication and access controls

- Security infrastructure and practices

Our risk scoring system is powered by an agentic AI engine that constructs detailed risk profiles. These risk profiles provide a clear summary of strengths and vulnerabilities with detailed supporting evidence.

AI Risk IQ helps you keep pace with the growing set of AI tools your employees adopt, so you understand what each tool is and the risks it carries.

AI Data Flow Control

Once you understand the risk posed by AI, the next step is to take action to protect your data.

AI Data Flow Control enforces risk-based policies on data ingress and egress.

This feature uses Cyberhaven’s powerful Data Detection and Response (DDR) engine to inspect data flowing to and from AI tools. When the system detects sensitive data movement, it triggers an action, such as blocking the transfer or warning the user, to protect the data.

Whether data is going into AI or coming out of it, Cyberhaven ensures you stay in control.
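To make the idea of a risk-based policy concrete, here is a minimal Python sketch of the kind of decision described above. Given a tool's risk tier, the sensitivity of the detected data, and the direction of the flow, it chooses to allow, warn, or block. Everything in it is hypothetical: the names `ToolRisk`, `Sensitivity`, `Direction`, and `decide_action`, the three-tier scales, and the thresholds are illustrative assumptions, not Cyberhaven's API or its actual policy logic.

```python
from enum import Enum, IntEnum


class Direction(Enum):
    """Hypothetical: which way data moves relative to the AI tool."""
    TO_AI = "ingress"    # e.g. a prompt or file upload sent to the tool
    FROM_AI = "egress"   # e.g. a response delivered back to a user


class ToolRisk(IntEnum):
    """Hypothetical coarse risk tier for an AI tool (higher is riskier)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Sensitivity(IntEnum):
    """Hypothetical sensitivity label produced by content inspection."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


def decide_action(
    tool_risk: ToolRisk,
    sensitivity: Sensitivity,
    direction: Direction,
    recipient_authorized: bool = True,
) -> Action:
    """Map one detected data movement to an enforcement action (illustrative thresholds)."""
    # Non-sensitive data is allowed regardless of tool or direction.
    if sensitivity == Sensitivity.PUBLIC:
        return Action.ALLOW

    if direction is Direction.TO_AI:
        # Any sensitive data sent to a high-risk tool is blocked.
        if tool_risk == ToolRisk.HIGH:
            return Action.BLOCK
        # Medium-risk tools: block confidential data, warn on internal data.
        if tool_risk == ToolRisk.MEDIUM:
            return Action.BLOCK if sensitivity == Sensitivity.CONFIDENTIAL else Action.WARN
        # Low-risk (e.g. sanctioned) tools are allowed in this sketch.
        return Action.ALLOW

    # FROM_AI: sensitive content in a response goes only to authorized users.
    if not recipient_authorized:
        return Action.BLOCK if sensitivity == Sensitivity.CONFIDENTIAL else Action.WARN
    return Action.ALLOW


if __name__ == "__main__":
    # Internal data pasted into a high-risk tool is blocked.
    print(decide_action(ToolRisk.HIGH, Sensitivity.INTERNAL, Direction.TO_AI).value)
    # A confidential AI response to an unauthorized user is blocked.
    print(decide_action(ToolRisk.LOW, Sensitivity.CONFIDENTIAL, Direction.FROM_AI,
                        recipient_authorized=False).value)
```

Keeping the decision in one pure function separates policy (what to do) from detection (what data is moving), so thresholds can be tuned as a tool's risk profile changes.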

Key Business Challenges Solved by Cyberhaven's Security for AI

Let’s explore some of the ways your business can benefit from Cyberhaven’s Security for AI.

Uncovering Shadow AI: we deliver unprecedented visibility into all AI across your organization—from standalone applications to AI functionality embedded within SaaS platforms. Our continuous discovery finds every AI tool, whether it's been used for months or was just adopted moments ago.

Stopping sensitive data leaks to high-risk or unsanctioned AI: we offer risk-based controls to manage the data that users share with AI. As AI adoption grows, you can proactively adjust your risk exposure to these tools by monitoring their use and stopping sensitive data from being sent to them.

Limiting exposure of confidential information: AI may inadvertently surface confidential data to unauthorized users. With our solution, you can monitor and control who receives AI responses containing HR records, financial data, and proprietary product information, so that confidential content remains accessible only to authorized employees.

Enabling AI adoption: our solution identifies your organization's AI usage patterns and internal champions, allowing IT teams to develop targeted policies and education programs. By monitoring how AI is used and by whom, we help your workforce leverage AI's productivity benefits without compromising security.

Securing Data in an Era of Rapid AI Adoption

As AI adoption accelerates across the enterprise, organizations face a critical inflection point. The question isn't whether your employees use AI—they already do—but whether you'll have the visibility and control needed to securely enable AI tools while protecting sensitive data. Traditional security approaches weren't designed for this new paradigm, leaving dangerous blind spots as employees share confidential information with both established and emerging AI tools.

Cyberhaven's Security for AI offers a comprehensive solution to this challenge. By uncovering Shadow AI usage, stopping sensitive data leaks, and limiting unauthorized exposure of confidential information, our solution helps you navigate AI security and drive AI adoption.
