On July 23rd, the White House released America’s AI Action Plan, a sweeping federal strategy to drive U.S. leadership in artificial intelligence. The message was loud and clear: AI is a national imperative. The plan calls for removing regulatory barriers, investing in infrastructure, and accelerating AI adoption across commercial and government sectors. For data security leaders, this signals a pivotal shift.

A quick summary of the AI Action Plan

"America's AI Action Plan" outlines a federal strategy to accelerate U.S. leadership in artificial intelligence. It focuses on three pillars:

- Boosting AI innovation

- Building infrastructure like data centers and semiconductors

- Strengthening international AI diplomacy and security

It calls for removing regulatory barriers, encouraging rapid adoption in commercial and government sectors, and protecting AI systems from insider threats and intellectual property theft.

What it means for data security leaders

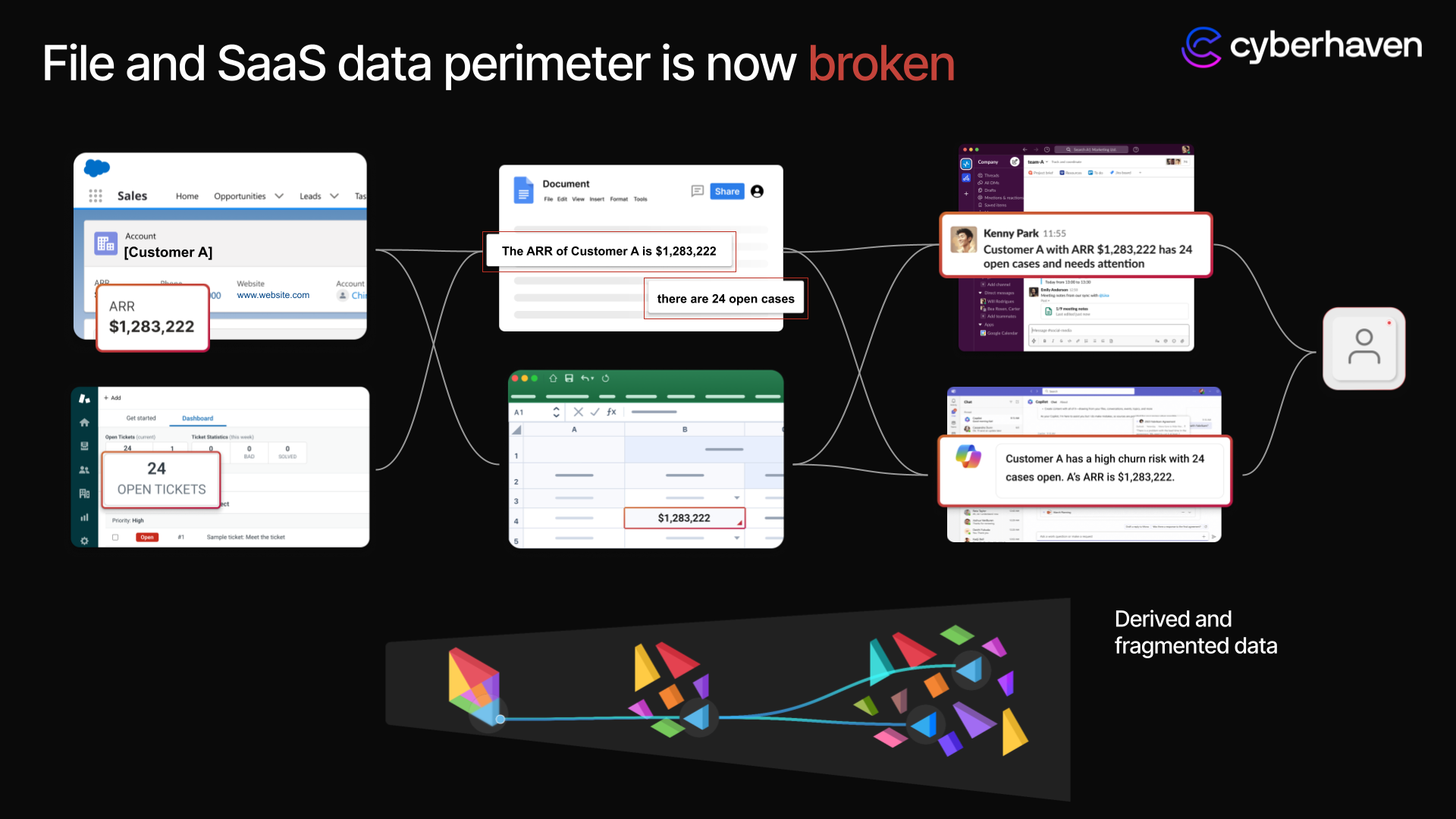

While the headlines may focus on semiconductors, energy demands, and economic growth, the real story for CISOs is what happens to the data that fuels these AI systems. AI doesn’t just process information: it multiplies it, amplifies it, and creates derivatives and fragments of data that flow through organizations faster and faster. The more we use AI, the more exposed our sensitive data becomes.

This creates an urgent challenge: how can you embrace the power of AI without losing control of what matters most, your data?

Why data security is foundational to AI

AI systems are only as powerful as the data they’re trained on. Proprietary documents, source code, product designs, and customer information fuel the models driving today’s innovation. That makes your organization’s data strategically valuable. If that data leaks, gets corrupted, or falls into the wrong hands, it doesn’t just pose a privacy risk; it directly undermines your AI investments.

That’s why data security isn’t just adjacent to AI – it’s fundamental. The organizations that protect their data will be the ones able to safely capitalize on AI’s promise.

Key takeaways from the AI Action Plan for data security teams

1. Data protection is increasingly a national security priority.

The AI Action Plan explicitly calls out the need to prevent “malicious cyber actors, insider threats, and others” from stealing or misusing AI innovations. That includes the models themselves and the proprietary data that trains them.

Whether you’re a manufacturer, financial institution, healthcare provider, or software company, your data is now part of the geopolitical equation. If it fuels AI, it must be protected. This aligns with a growing recognition that data security is not just a compliance checkbox – it’s a business and national security imperative.

2. AI is moving faster than policies can keep up.

Federal agencies are being instructed to slash red tape and push AI adoption across critical industries. That same urgency is playing out in the private sector, where teams are experimenting with tools like ChatGPT, GitHub Copilot, and others to boost productivity.

The result? Sensitive data is flowing into generative AI tools at record rates, often through unsanctioned, unmanaged accounts. As noted in our recent “2025 AI Adoption & Risk Report,” AI usage at work has grown more than 61x over the past two years. Furthermore, about 35% of the data flowing to AI tools is deemed “sensitive,” and the majority of those AI tools themselves pose a “high” or “critical” risk to the data.

Sensitive data flowing to risky AI tools and shadow AI is no longer theoretical: it’s happening now, and teams should take action before the next leak becomes public.

3. Insider risk is growing, and it’s harder to detect.

The AI Action Plan singles out insider threats as a top concern in protecting commercial and government AI innovations. That’s because AI changes the dynamics of insider risk. It’s no longer just about someone stealing a file. It’s about someone copying and pasting sensitive content into an AI prompt or, worse, exposing that data when it is inadvertently used for model training or when AI tools surface previously hidden dark data.

The number of potential egress channels has also increased dramatically. Dedicated AI tools can be accessed not only through web browsers and desktop clients (making endpoint controls a necessity) but also through AI capabilities embedded in an ever-growing number of applications that support critical workflows across every department and team.

Security teams need modern visibility into how data is being used, not just where it’s stored.

Why legacy DLP tools aren’t ready for the AI era

Traditional DLP wasn’t built for fragmented, fast-moving data flows or for the complexity of AI. These tools rely on brittle policies and static classification that lack visibility into how data moves. They miss leaks, generate false positives, and frustrate employees trying to get work done.
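To make “brittle” concrete, here is a minimal, hypothetical sketch of the kind of static content rule that traditional DLP depends on. The SSN-shaped regex and the sample strings are assumptions invented for illustration; they are not taken from any particular product.

```python
import re

# Hypothetical static rule: flag anything shaped like a U.S. Social
# Security number written as NNN-NN-NNNN.
SSN_RULE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def static_rule_flags(text: str) -> bool:
    """Return True if the fixed pattern appears anywhere in the text."""
    return SSN_RULE.search(text) is not None


# Illustrative inputs invented for this sketch.
samples = [
    "customer SSN 123 45 6789",               # real SSN with spaces: missed
    "Part no. 555-12-0042 restocked",         # harmless ID with the same shape: flagged
    "def pricing_model(x): return x * 1.37",  # proprietary code, no fixed pattern: missed
]

for text in samples:
    print(static_rule_flags(text), text)
```

The first and third inputs slip through while the second raises an alert: a rule keyed only to content shape both misses leaks and generates false positives.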

In today’s environment, that approach doesn’t just fail to protect data: it creates friction and drives users to work around controls, often turning to shadow AI – unsanctioned AI tools – to stay productive.

Simply put: security teams are stuck chasing alerts while sensitive data walks out the door.

A better approach: reimagined DLP and insider threat protection

It’s time to reimagine data security for the AI era.

At Cyberhaven, we believe that protecting data in the age of AI requires a fundamentally different approach, one rooted in context, precision, and automation.

That’s why we reimagined data loss prevention and insider threat protection from the ground up.

We started with data lineage, which traces where data came from, how it changed, and who used it, across endpoints, apps, cloud services, and AI tools.
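As a rough illustration of the lineage idea (a simplified sketch, not Cyberhaven’s implementation), each data event below records where the data was, what happened to it, who acted, and which earlier event it was derived from. Walking those links backward from an outbound event recovers the data’s history. The `DataEvent` schema, the event IDs, and the example locations are all hypothetical, and real lineage forms a graph rather than the single chain shown here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class DataEvent:
    """One step in a piece of data's history (hypothetical schema)."""
    event_id: str
    action: str        # e.g. "download", "copy", "paste"
    location: str      # endpoint, app, cloud service, or AI tool
    user: str
    parent: Optional[DataEvent] = None  # event this data was derived from


def trace_lineage(event: DataEvent) -> list[DataEvent]:
    """Follow parent links back to the data's origin; return oldest first."""
    chain: list[DataEvent] = []
    current: Optional[DataEvent] = event
    while current is not None:
        chain.append(current)
        current = current.parent
    return list(reversed(chain))


# Hypothetical flow: code downloaded from a repo, copied locally, pasted into a chatbot.
origin = DataEvent("e1", "download", "github.com/acme/core-repo", "alice")
copied = DataEvent("e2", "copy", "alice-laptop:editor", "alice", parent=origin)
pasted = DataEvent("e3", "paste", "chat.example-ai.com", "alice", parent=copied)

for step in trace_lineage(pasted):
    print(f"{step.event_id}: {step.user} {step.action} @ {step.location}")
```

Seen in isolation, the final paste is just text entering a chat window; with its lineage attached, it is recognizably source code from a company repository.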

Then we added AI-powered capabilities for risk detection, content inspection, prioritization, and incident summarization. This AI-driven detection can identify sensitive data that is too complex for the simple pattern matching used in traditional DLP, improving accuracy and reducing false positives.

Finally, we enabled secure AI adoption by giving teams visibility and control over how data flows into AI tools, whether sanctioned or shadow. Cyberhaven stops risky activity like copying IP into chatbots or exporting customer data to unmanaged devices in real time.

That’s how we’ve enabled our customers – from those building frontier AI models to those using AI to revolutionize critical processes – to investigate incidents up to 5x faster, resolve incidents up to 2x faster, and cut false positives by up to 90%.

Recommendations for security leaders

In light of the AI Action Plan and the accelerating adoption of AI across every industry, data security leaders should act now to:

1. Map where sensitive data flows into AI tools.

Don’t assume your policies are working. Get visibility into what data is being used, by whom, and in what context. Look for tools that provide high-fidelity visibility across browser activity, SaaS, and endpoints.

2. Focus on context, not just content.

Data lineage reveals intent: Was this an attempt at sensitive data exfiltration, or business as usual? With that context, you can make smarter decisions, reduce false positives, and stop only the behaviors that matter.

3. Empower, don’t punish, your users.

Inline coaching helps users understand risky actions and course-correct in real time. By warning and educating rather than blocking, you reduce shadow IT and build a culture of secure AI use.

4. Consolidate your stack.

Look for a unified platform that combines DLP and insider threat protection with AI-aware controls. Separate tools mean blind spots, and today’s threats cross those boundaries.
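Recommendations 2 and 3 can be combined in a small, hypothetical policy function: the verdict depends on where the data came from (its lineage) and where it is going, and the non-blocking outcome is an inline coaching warning. The origin labels, the sanctioned-tool list, and the rules themselves are assumptions made for illustration, not a recommended or product-specific policy.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    WARN = "warn"    # inline coaching: explain the risk, let the user reconsider
    BLOCK = "block"


# Hypothetical labels; in practice these would come from lineage data.
SENSITIVE_ORIGINS = {"source-code-repo", "crm-export", "hr-system"}
SANCTIONED_AI = {"corporate-copilot"}


def decide(origin: str, destination: str, destination_is_ai: bool) -> Verdict:
    """Judge a data movement by its context (origin and destination),
    not by its content alone. Hypothetical rules for illustration."""
    if origin not in SENSITIVE_ORIGINS:
        return Verdict.ALLOW   # business as usual
    if destination_is_ai and destination in SANCTIONED_AI:
        return Verdict.ALLOW   # sensitive data, but an approved AI tool
    if destination_is_ai:
        return Verdict.BLOCK   # sensitive data heading into shadow AI
    return Verdict.WARN        # unusual move: coach rather than block


print(decide("source-code-repo", "chat.example-ai.com", True).value)  # → block
print(decide("crm-export", "personal-usb-drive", False).value)       # → warn
print(decide("marketing-site", "chat.example-ai.com", True).value)   # → allow
```

Defaulting other sensitive movements to a warning rather than a block reflects the coaching approach in recommendation 3; only the clearest high-risk case is stopped outright.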

The path forward

AI adoption isn’t slowing down. And with government policy now aligned to push it even faster, your data security strategy must evolve too. Cyberhaven helps you stay ahead by securing data wherever it flows, so you can safely embrace AI.

To learn more about AI adoption in the enterprise, read our AI Adoption & Risk Report, 2025.
